Yong Liu

About Me

I am currently a PhD student (since Fall 2021) at the School of Software, Tsinghua University, and a member of THUML, advised by Prof. Mingsheng Long.

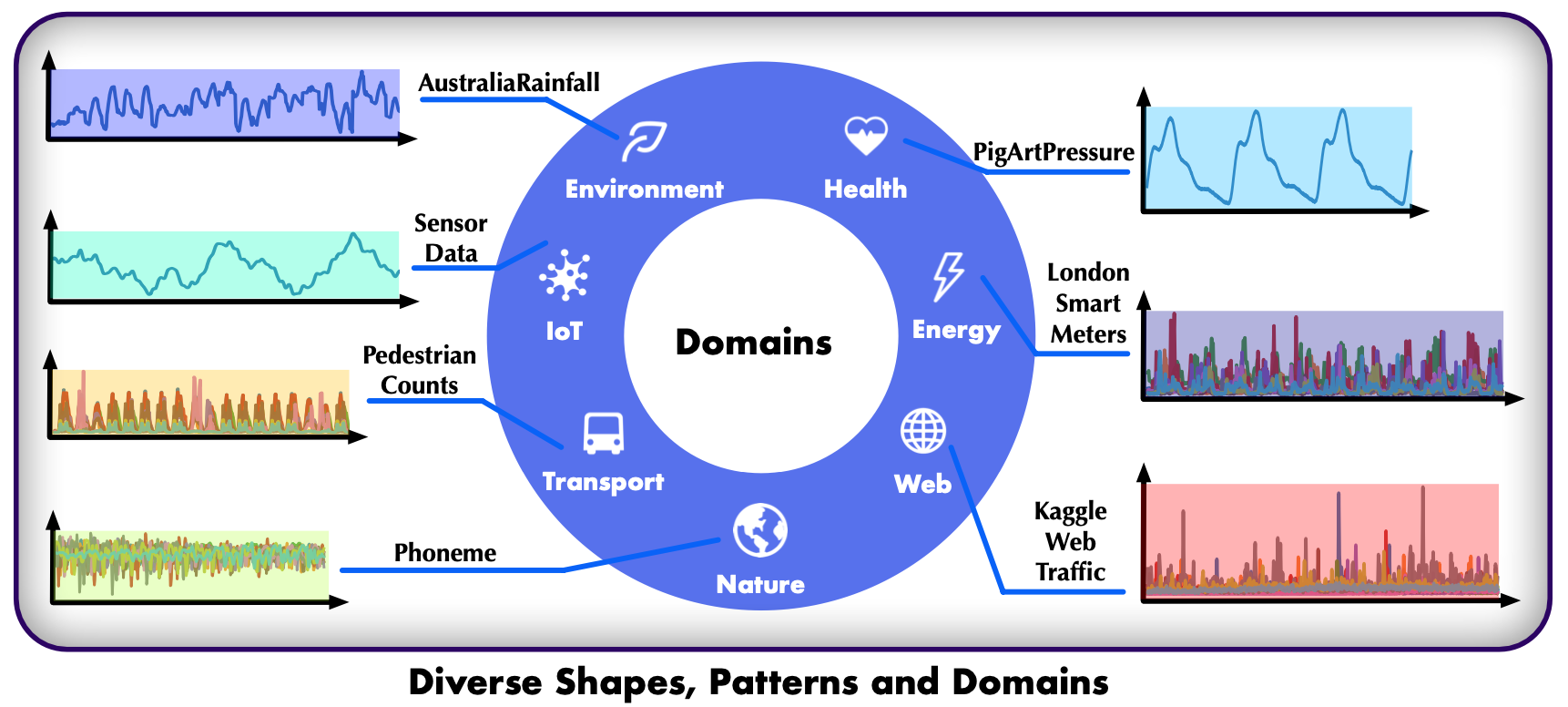

My research interests cover Deep Learning and Time Series Analysis. I am currently working on time series foundation models and MLSys for time series. In addition to pure research, I also dedicate myself to promoting research on valuable real-world applications. My research aims to contribute to the advancement of intelligent systems capable of handling massive and complicated temporal data across domains, including finance, healthcare, industry, and environment.

For more information, please take a look at my Google Scholar and GitHub.

News

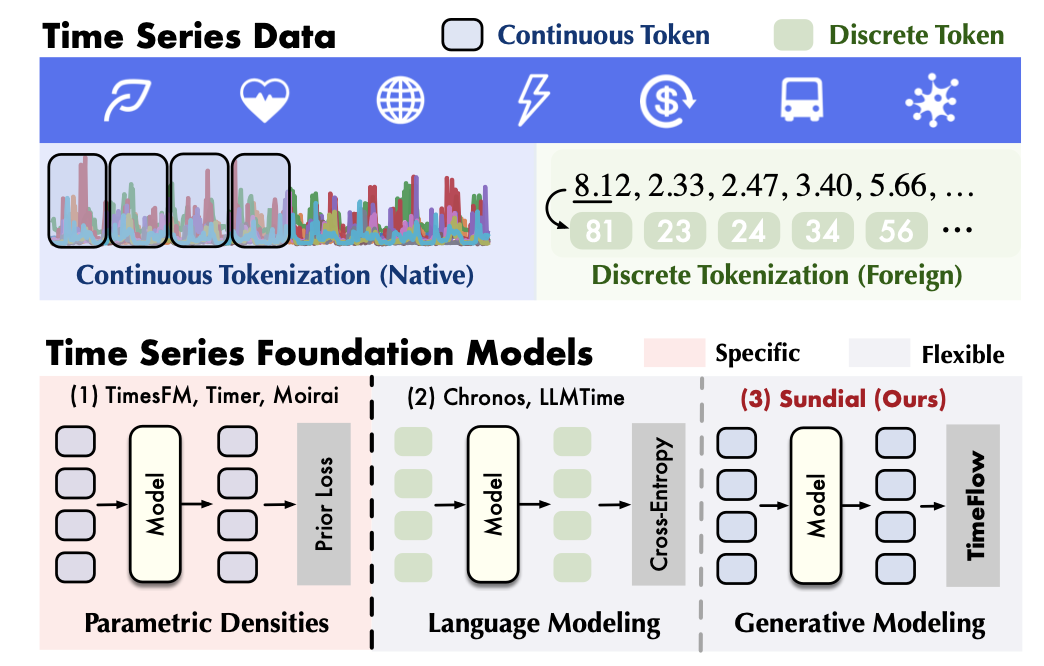

- [Jun. 2025] A trillion-scale pre-trained time series foundation model (Sundial) is released on HuggingFace.

- [Jun. 2025] A generative foundation model for time series (Sundial) was accepted as an ICML 2025 Oral!

- [Mar. 2025] An open-source large time series model (Timer), which supports zero-shot forecasting, is released on HuggingFace.

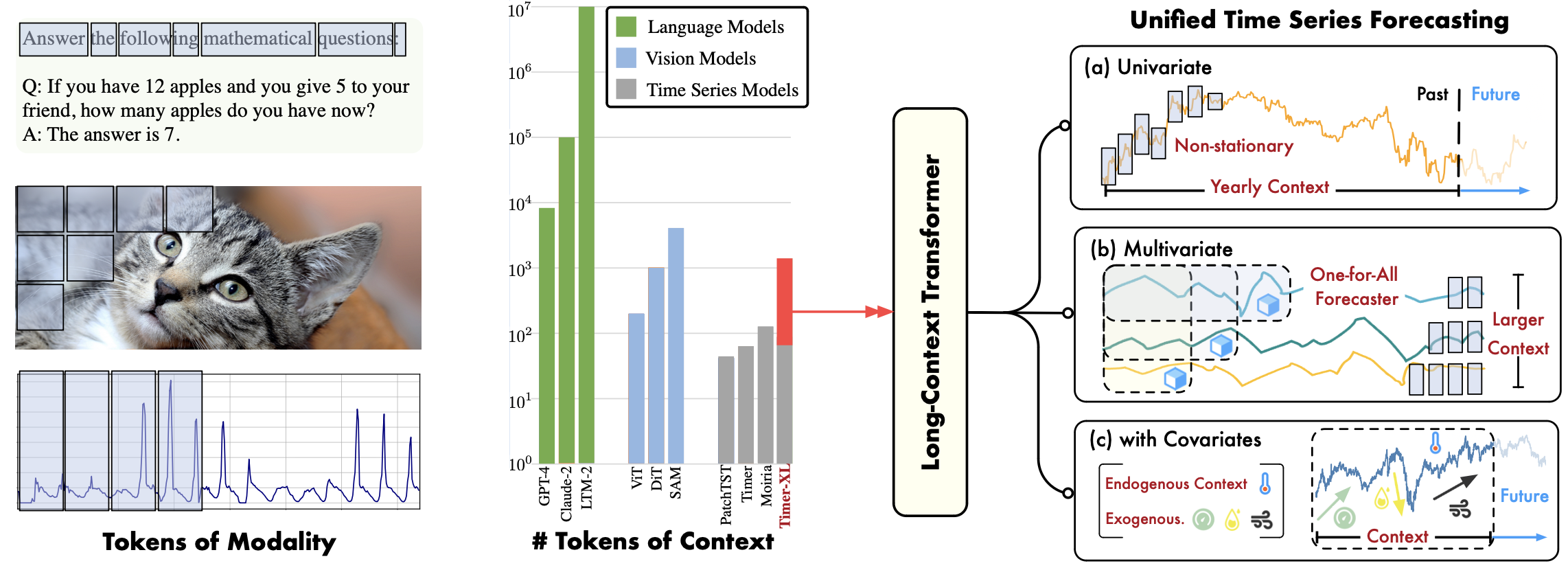

- [Jan. 2025] A long-context model for multidimensional time series (Timer-XL) was accepted at ICLR 2025.

- [Jan. 2025] iTransformer for Ant Group Green Computing was awarded as an Outstanding Project of the CCF Fund.

- [Sep. 2024] Two papers (AutoTimes and TimeXer) were accepted at NeurIPS 2024.

- [Jun. 2024] A large-scale pre-trained time series model (Timer) was accepted at ICML 2024. Code is available.

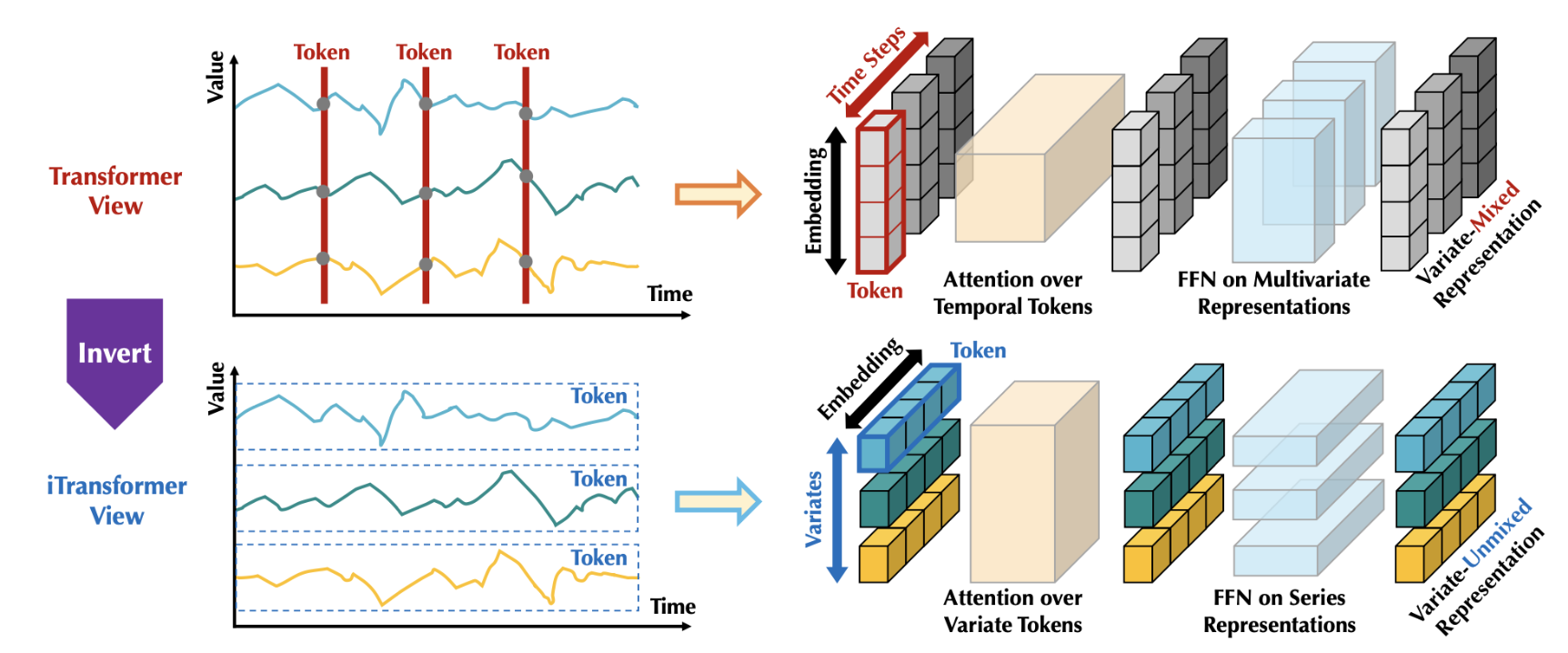

- [Jan. 2024] A Transformer for multivariate forecasting (iTransformer) was accepted as an ICLR 2024 Spotlight!

- [Dec. 2023] A native AI analytical engine (AINode) for the Apache IoTDB database is released!

- [Oct. 2023] A linear model for non-stationary forecasting inspired by Koopman theory (Koopa) was accepted at NeurIPS 2023.

- [Apr. 2023] TimesNet for general time series analysis was accepted at ICLR 2023.

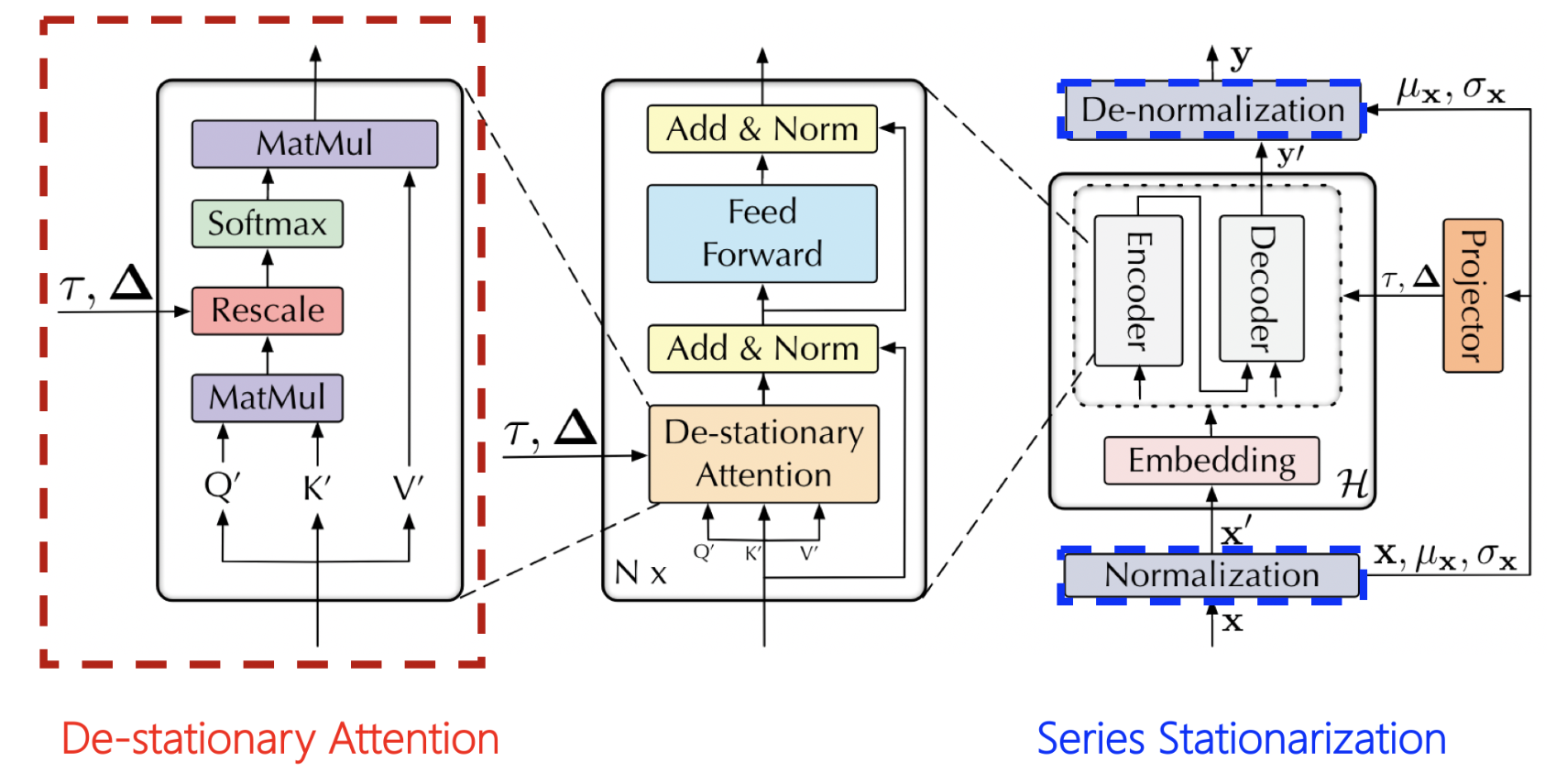

- [Oct. 2022] Non-stationary Transformer was accepted at NeurIPS 2022.

Publications & Preprints

-

ICML

International Conference on Machine Learning, 2025.

-

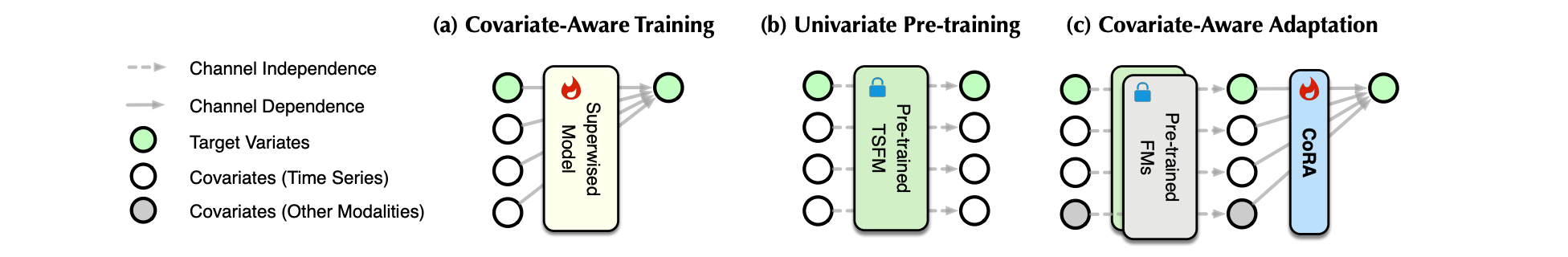

ICLR

International Conference on Learning Representations, 2024.

-

NeurIPS

Conference on Neural Information Processing Systems, 2022.

-

ICLR

International Conference on Learning Representations, 2025.

-

NeurIPS

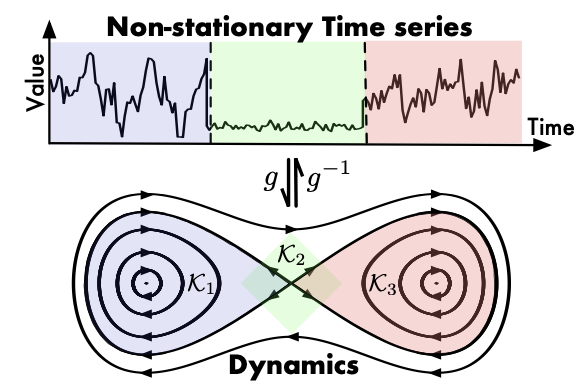

Conference on Neural Information Processing Systems, 2023.

-

ICLR

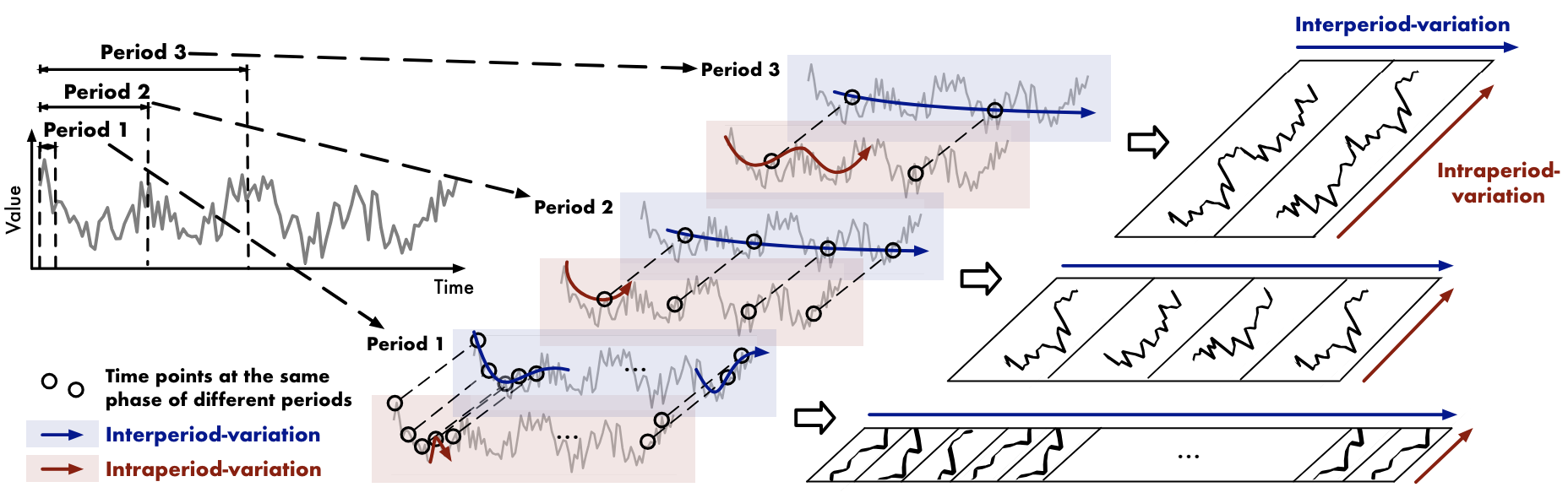

International Conference on Learning Representations, 2023.

-

arXiv

arXiv preprint, 2025.

-

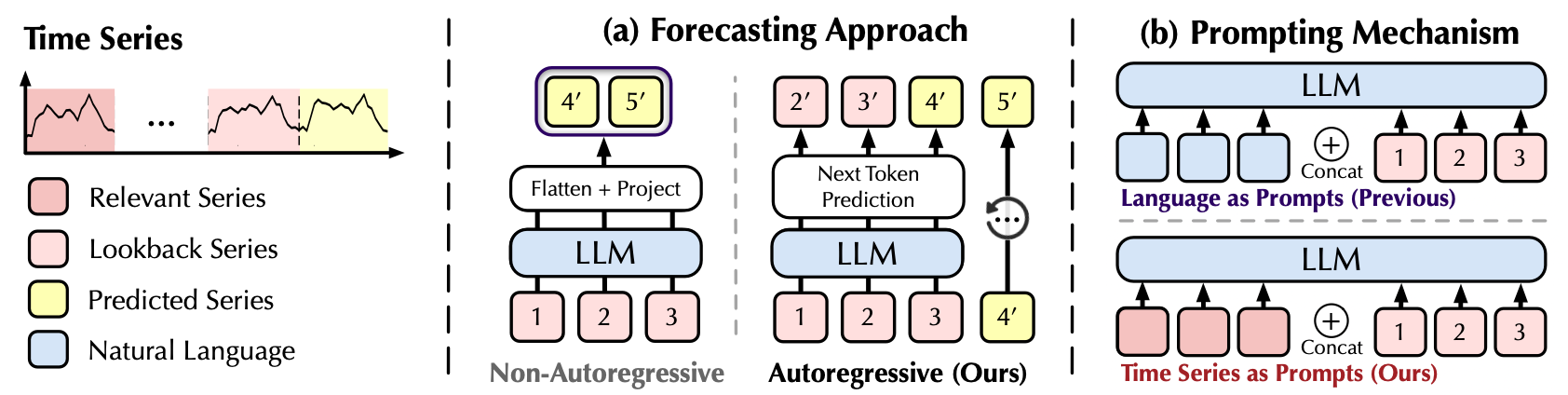

NeurIPS

Conference on Neural Information Processing Systems, 2024.

-

NeurIPS

Conference on Neural Information Processing Systems, 2024.

-

ACM MM

ACM International Conference on Multimedia, 2025. [arXiv] Accepted.

-

NeurIPS

Conference on Neural Information Processing Systems, 2025. [arXiv] Accepted.

-

IJCAI

International Joint Conferences on Artificial Intelligence, 2025. [arXiv] Accepted.

-

arXiv

arXiv preprint, 2025.

-

arXiv

arXiv preprint, 2025.

-

JMLR

Journal of Machine Learning Research, 2022.

-

ICML

International Conference on Machine Learning, 2021.


(* Equal Contribution, # Corresponding Author)

Selected Projects

HuggingFace Models

- Sundial - Generative time series foundation models (pre-trained on 1 trillion time points).

- Timer - Large time series models (pre-trained on 260 billion time points).

Deep Models for Time Series

- iTransformer - Foundation Multivariate Time Series Model.

- Non-stationary Transformers - Transformers for Non-stationary Forecasting.

- Koopa - Theory-inspired efficient non-stationary time series forecaster.

- AutoTimes - LLM-based time series forecasting with texts.

Algorithms and Model Package

- Large-Time-Series-Model - Maintainer.

- OpenLTM - Maintainer.

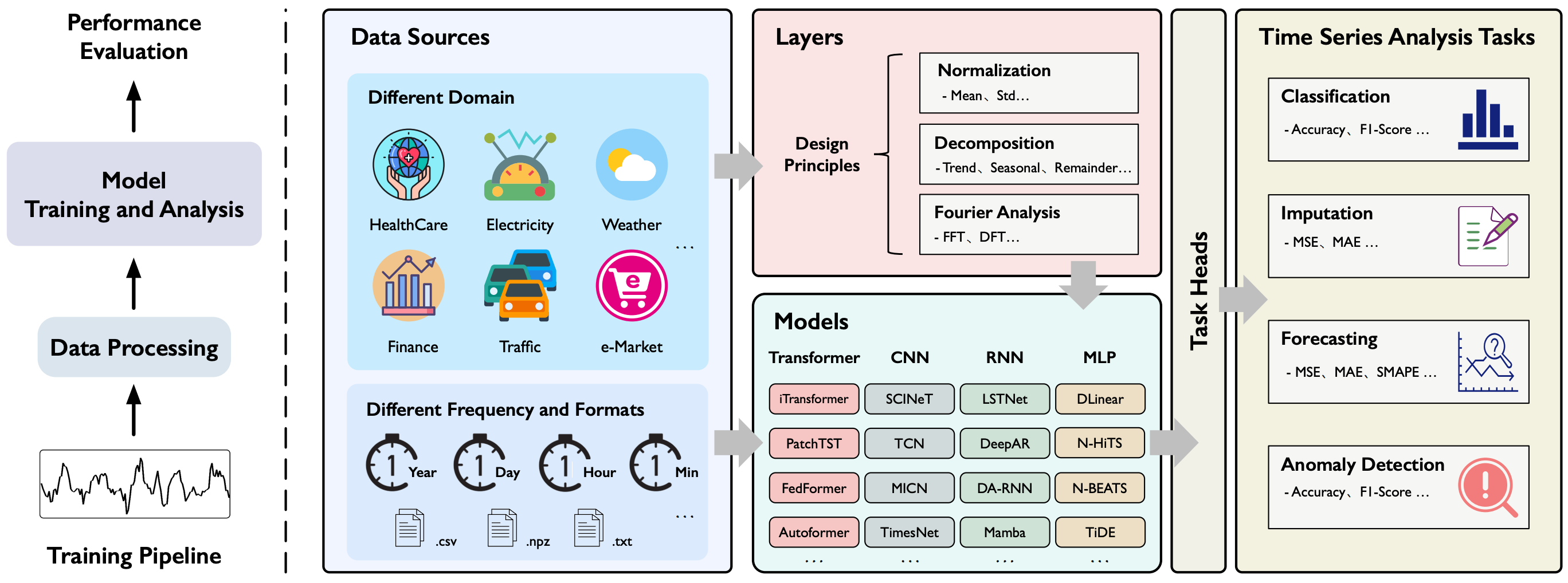

- Time Series Library - Co-Author.

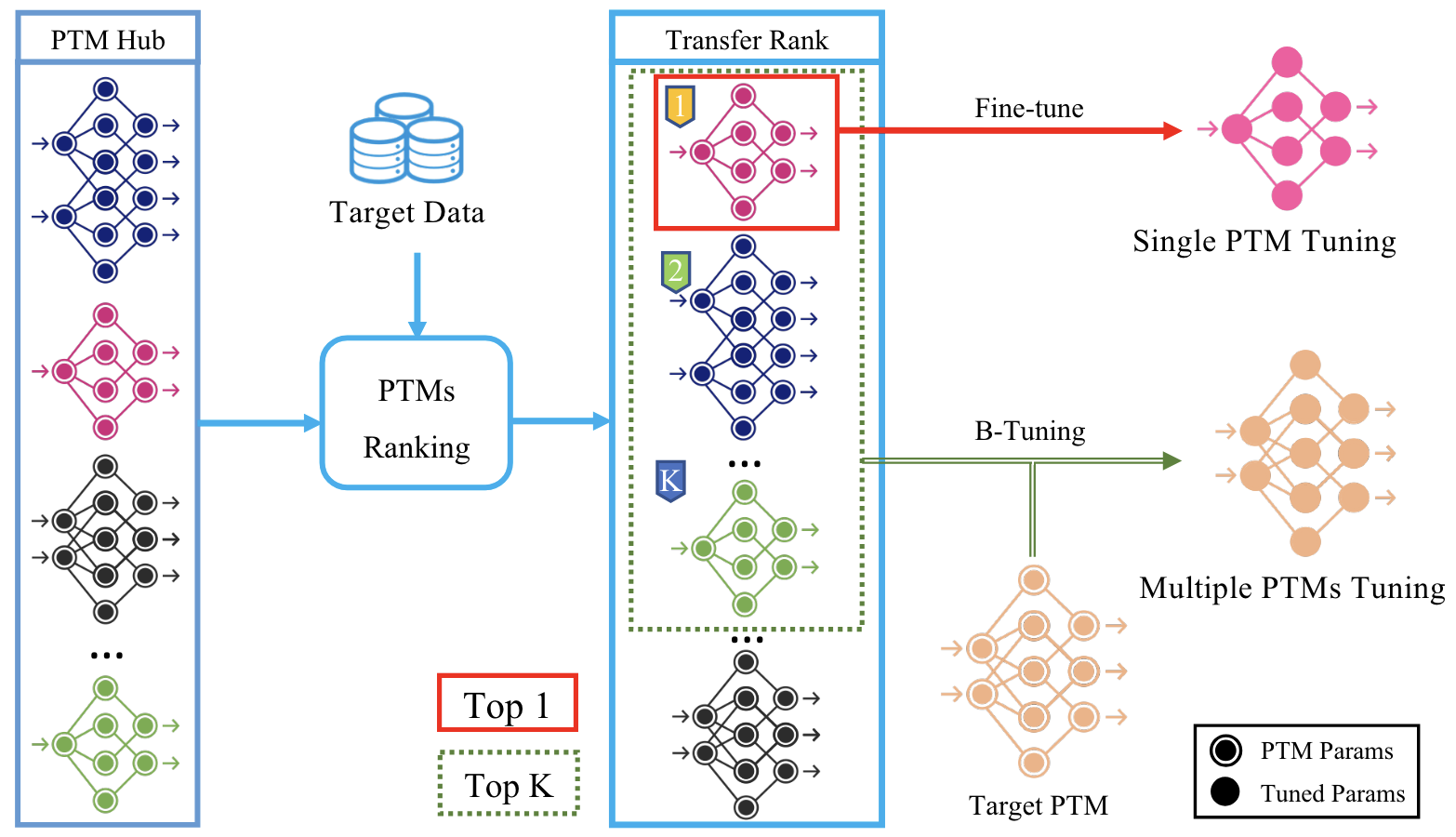

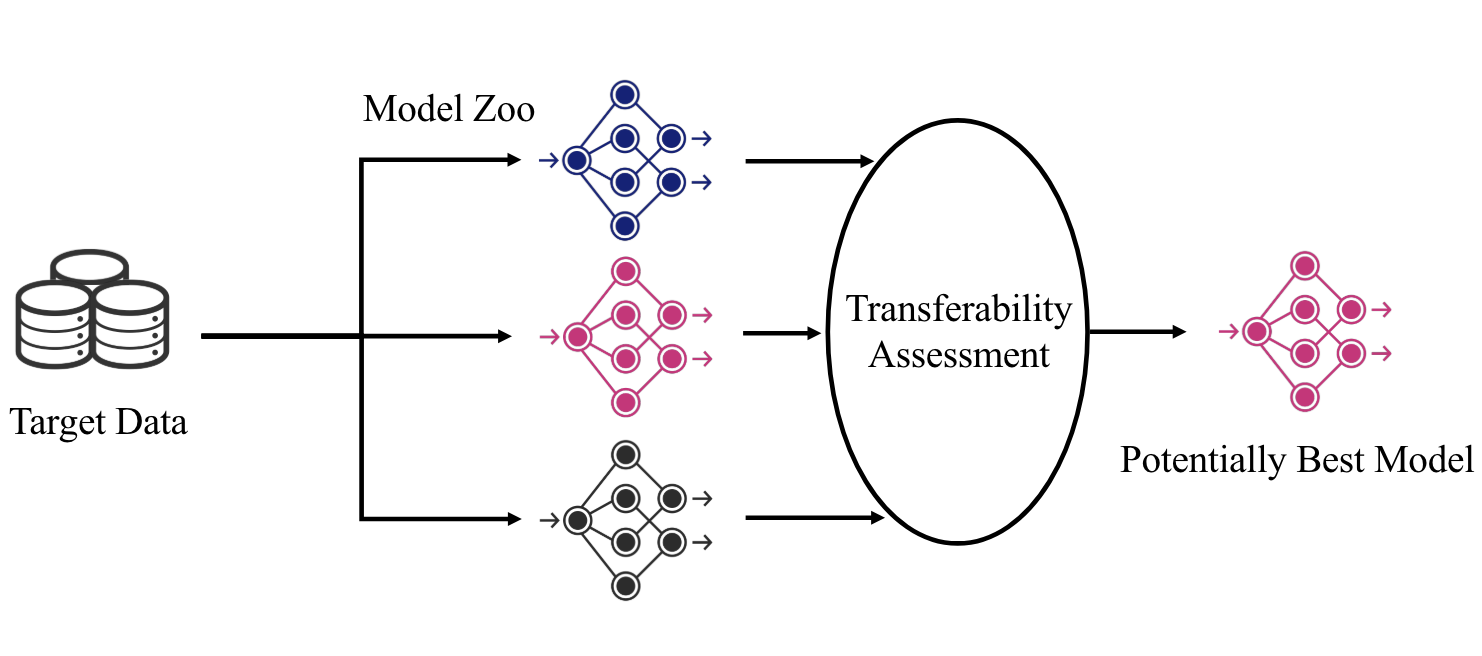

- Transfer Learning Library - Committer.

System and Applications

- Apache IoTDB - AINode: Native machine learning engine in time series database.

- Ant Group - iTransformer: Green computing of data center, developed in Ant Group.

Invited Talks

- Oral Presentation (Sundial) at ICML 2025. [Slides]

- Large Time Series Models and Resources at ISF 2025. [Slides]

- Foundation Models and MLSys for Time Series at Apache IoTDB. [Slides]

- Large Models for Native Database Analysis at TPCTC 2024. [Slides]

- Exploring Large Models for Time Series at IoA, CAS. [Slides]

- Deep Learning for Time Series Applications at DoA, THU. [Slides]

Services

Academic Services

- Conference Reviewer, International Conference on Learning Representations (ICLR) 2024-2025.

- Conference Reviewer, International Conference on Machine Learning (ICML) 2022-2025.

- Conference Reviewer, Conference on Neural Information Processing Systems (NeurIPS) 2023-2025.

- Conference Reviewer, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023.

- Conference Reviewer, International Conference on Very Large Data Bases (VLDB) 2023.

Teaching Experiences

- Teaching Assistant, Database System, Spring 2024, Prof. Jianmin Wang.

- Teaching Assistant, Machine Learning, Fall 2023, Prof. Mingsheng Long.

- Teaching Assistant, Introduction to Artificial Intelligence, Spring 2023, Prof. Mingsheng Long.

- Teaching Assistant, Deep Learning, Fall 2022, Prof. Mingsheng Long.

- Teaching Assistant, Introduction to Artificial Intelligence, Spring 2022, Prof. Mingsheng Long.

- Teaching Assistant, Machine Learning, Fall 2021, Prof. Mingsheng Long.

- Teaching Assistant, Introduction to Artificial Intelligence, Spring 2021, Prof. Mingsheng Long.

Education

- Ph.D. in Software Engineering (School of Software, Tsinghua University), 2021 to present.

- Second Degree Bachelor in Economics (School of Economics and Management, Tsinghua University), 2018 to 2021.

- Bachelor in Software Engineering (School of Software, Tsinghua University), 2017 to 2021.

Honors & Awards

- National Scholarship (清华大学博士生国家奖学金), 2025.

- Outstanding Graduation Thesis of Beijing (北京市优秀毕业论文), 2021.

- Outstanding Graduate of Beijing (北京市优秀毕业生), 2021.

- Excellent Graduate of Tsinghua (清华大学优秀毕业生), 2021.

- Future Researcher Scholarship of Tsinghua (清华大学未来学者奖学金), 2021.

- Tuyoo Scholarship (途游奖学金), 2024.

- Shenzhen Stock Exchange Scholarship (深交所奖学金), 2023.

- Boeing Scholarship (波音奖学金), 2020.

- Tang Lixin Scholarship (唐立新优秀奖学金), 2020.

- Jiang Nanxiang Scholarship (蒋南翔奖学金), 2019.

- Huawei Scholarship (华为奖学金), 2018.

Powered by Jekyll and Minimal Light theme.
